Here are some examples of what our customers do:

Your data model can always be expanded to accommodate additional data sources and to fulfill additional reporting needs, without additional complexity. This makes your solution future-proof and virtually limitless.

The dataFaktory stores the raw data from each source in its original state and creates a new set of data at every loading operation. Because these historical records accumulate, you can always look back and compare your current situation with previous states. Trends can be drawn from recent months or compared with the previous year(s), opening the door to predictive analysis and better-informed decision-making.
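To make the idea of "one set of data per loading operation" concrete, here is a minimal sketch in Python. It is an illustration only, not the dataFaktory's actual implementation: the `RawStore` class, its methods and the sample figures are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RawStore:
    """Hypothetical append-only store: one untouched snapshot per load."""
    snapshots: dict = field(default_factory=dict)  # load date -> list of raw rows

    def load(self, load_date: date, rows: list) -> None:
        # Never overwrite history: each loading operation adds a new snapshot.
        if load_date in self.snapshots:
            raise ValueError(f"snapshot for {load_date} already exists")
        self.snapshots[load_date] = [dict(row) for row in rows]

    def total(self, load_date: date, column: str) -> float:
        # Sum one numeric column over the snapshot taken on load_date.
        return sum(row[column] for row in self.snapshots[load_date])

    def change(self, earlier: date, later: date, column: str) -> float:
        # Compare the same measure between two historical states.
        return self.total(later, column) - self.total(earlier, column)


# Illustrative data: the same source loaded one year apart.
store = RawStore()
store.load(date(2023, 1, 31), [{"project": "A", "spend": 100.0},
                               {"project": "B", "spend": 50.0}])
store.load(date(2024, 1, 31), [{"project": "A", "spend": 120.0},
                               {"project": "B", "spend": 70.0}])

delta = store.change(date(2023, 1, 31), date(2024, 1, 31), "spend")
print(delta)
```

Because earlier snapshots are never modified, any past state can be recomputed and compared with the current one at any time.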

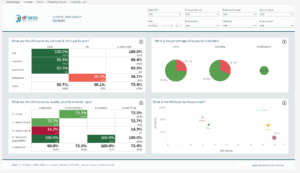

Your data has a Data Quality Score of 79%.

Data quality is often mentioned by our clients but is difficult to objectivise. dFakto brings a real answer with our Data Quality Score.

In addition to the data vault architecture, in which data controls, data enrichments and business rules (calculations, …) are applied separately from the raw data, the dataFaktory includes a data quality measurement mechanism that summarises the quality of a specific data item or of a full data source in a single percentage: the Data Quality Score. This unique feature enables you to compare data quality over time and set quality improvement objectives.
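The exact formula behind the Data Quality Score is not described here. As a rough sketch, one plausible way to condense quality into a single percentage is to count the share of rule checks that pass. The function `quality_score`, the rules and the sample rows below are assumptions made purely for illustration.

```python
from typing import Callable, Dict, List

Rule = Callable[[dict], bool]


def quality_score(rows: List[dict], rules: Dict[str, Rule]) -> float:
    """Return the percentage of (row, rule) checks that pass.

    Assumed scoring scheme for illustration, not the product's formula.
    """
    checks = [rule(row) for row in rows for rule in rules.values()]
    if not checks:
        raise ValueError("need at least one row and one rule")
    return 100.0 * sum(checks) / len(checks)


# Hypothetical data controls applied to a small data source.
rules = {
    "budget_non_negative": lambda row: row["budget"] >= 0,
    "owner_present": lambda row: bool(row["owner"]),
}
rows = [
    {"id": 1, "budget": 1000, "owner": "ana"},
    {"id": 2, "budget": -5, "owner": "bob"},    # fails the budget rule
    {"id": 3, "budget": 200, "owner": ""},      # fails the owner rule
    {"id": 4, "budget": 300, "owner": "eve"},
]

score = quality_score(rows, rules)
print(f"Data Quality Score: {score:.0f}%")
```

Running the same rules against each new load would give a score per loading date, which is what makes comparisons over time and improvement targets possible.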

New reports and dashboards created in hours, turning data into information.

Qualified data, once controlled and enriched accordingly, are gathered into “information marts” (a set of qualified data gathered for a specific purpose and/or specific data consumers), ensuring easy and secure access for further uses of the data. Data can then be turned into decisional information through reports and dashboards, and into user information via portals or websites.

New information marts can be set up for new purposes and stakeholders, providing flexibility without limits.

Flawlessly turning data into information at high frequency.

The dataFaktory organises the uploading of raw data from various sources, the application of enrichment and business rules to those data, and their combination into reporting-ready data sets. All these workflows are scheduled, then automatically executed, delivering ready-to-use qualified information at the required frequency (near real-time, hourly, daily, monthly…).
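The three stages described above (load, apply rules, combine) can be pictured as a small chain of functions. This is a simplified sketch under stated assumptions: the function names, the `margin` rule and the sample sources are hypothetical and do not reflect the dataFaktory's internals.

```python
def load_raw(sources: dict) -> list:
    # Stage 1: gather raw rows from every source, tagging each with its origin.
    return [{**row, "source": name}
            for name, rows in sources.items()
            for row in rows]


def apply_rules(rows: list) -> list:
    # Stage 2: apply an enrichment / business rule on copies,
    # leaving the raw rows themselves untouched.
    return [{**row, "margin": row["revenue"] - row["cost"]} for row in rows]


def build_mart(rows: list) -> dict:
    # Stage 3: combine into a reporting-ready data set (total margin per source).
    mart = {}
    for row in rows:
        mart[row["source"]] = mart.get(row["source"], 0) + row["margin"]
    return mart


def run_workflow(sources: dict) -> dict:
    # One full execution of the workflow, from raw data to reporting data.
    return build_mart(apply_rules(load_raw(sources)))


# Illustrative inputs: one system export and one spreadsheet.
sources = {
    "erp": [{"revenue": 100, "cost": 60}, {"revenue": 80, "cost": 50}],
    "excel": [{"revenue": 40, "cost": 10}],
}
mart = run_workflow(sources)
print(mart)
```

In practice a scheduler would call something like `run_workflow` at the required frequency, so that no one has to trigger or consolidate anything by hand.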

With the dataFaktory, there is no need for manual, time-consuming and error-prone consolidation of data from different systems and Excel files.

Furthermore, our open, technology-agnostic architecture enables the dataFaktory to run on any database (SQL Server, PostgreSQL, Oracle, …), in any environment (AWS, Azure, OVHCloud, …), using any reporting tool (Power BI, Tableau, …). You keep building on your organisation’s existing technological investments and skills!